
What is Docker

Docker is a container platform that allows you to build, test, and deploy applications quickly. Let's take an example to break this down. Imagine several people who want to ship goods to different places: one wants to ship a car, another a piano or some electronics, others a box or a sack of grain. Transporting each of these items individually to its destination is a challenge for whoever is shipping them.

This is where the idea comes in of putting the goods into a box, a container, and shipping that container as a whole. During transit, the people working on the ship or airplane don't care what's inside the container; their job is to load it, sail the ship, and unload it. This solved a lot of problems: you don't have to worry about how to transport a car, a piano, or anything else, you just put it into a container and ship it.

Software has a similar problem. You create an application, share it with your friend, and your friend says, "Hey, this application is not working on my machine." Even though it works perfectly on your machine, your friend might be missing dependencies or some files, might be using a different version of a tool, or might be running a completely different environment. The code alone is not enough to run the application in a different environment.

So the idea is to put everything your application needs to run into a box-like structure called a container: your code, static website files, database, and any other tools. Then you give this container to your friend. In this analogy, the container is a shipping container for code, and Docker is the ship.

How Docker works

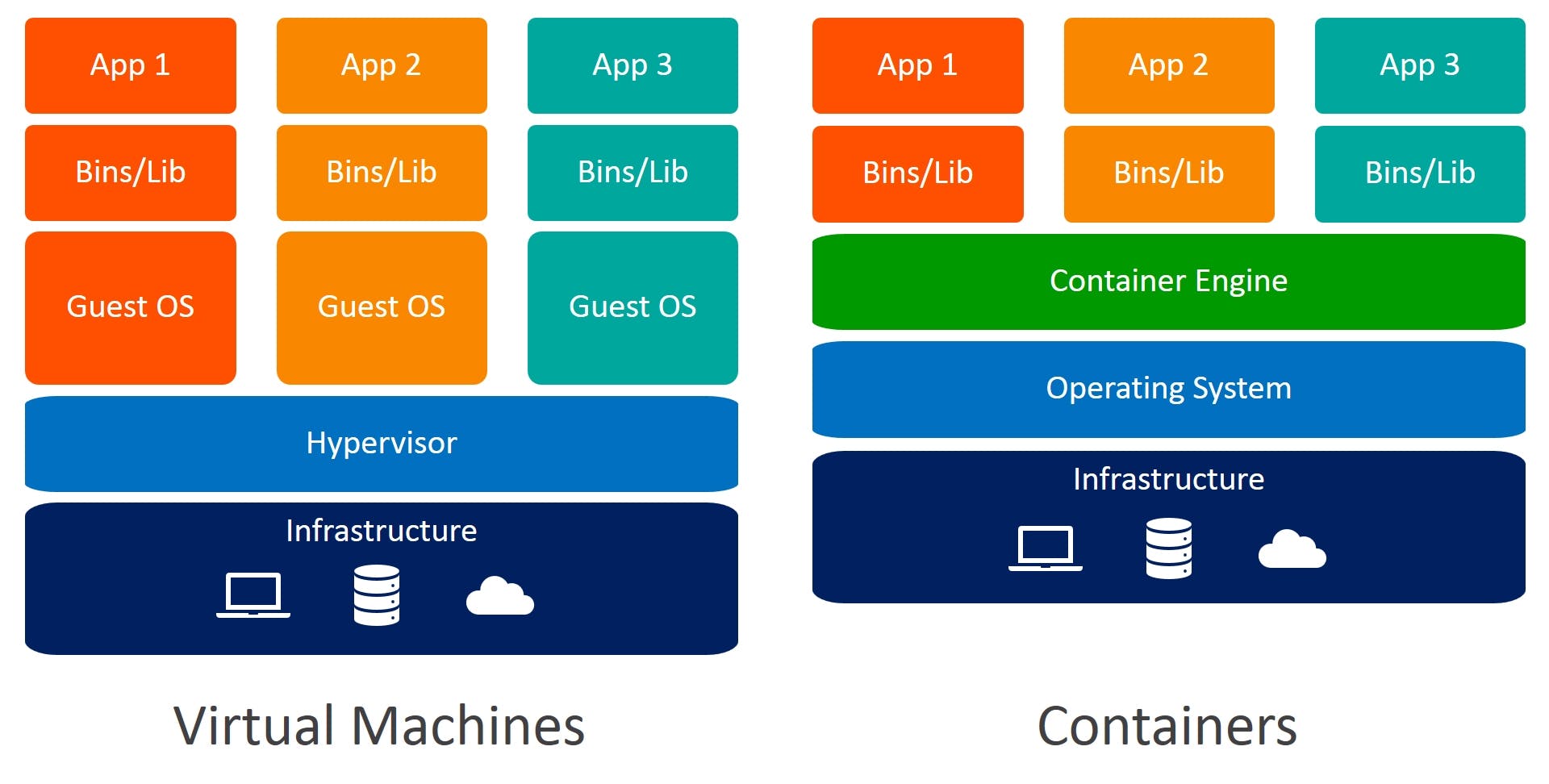

You might have heard about virtual machines, or tried dual-booting your computer with Windows and Linux on a single machine. With dual booting, the hard disk is partitioned and each operating system lives in its own space. Virtual machines go further: each one runs a complete guest operating system with its own share of disk, memory, and CPU. Setting this up takes time, and it can waste storage and resources if not planned carefully. As you can see in the image above, you have your infrastructure, on top of it a hypervisor that manages the virtual machines, and different guest operating systems running separately in their own spaces.

Containers, on the other hand, do not install an entire operating system in a separate slice of the hard disk the way VMs do. Instead, there is a single host OS, and a container engine such as Docker runs applications on that OS in isolated environments, all sharing the host's kernel. So instead of creating or installing a new OS again and again, Docker runs on top of the host OS and runs each application in its own isolated block, a container.

Docker Architecture and Components

Docker Daemon

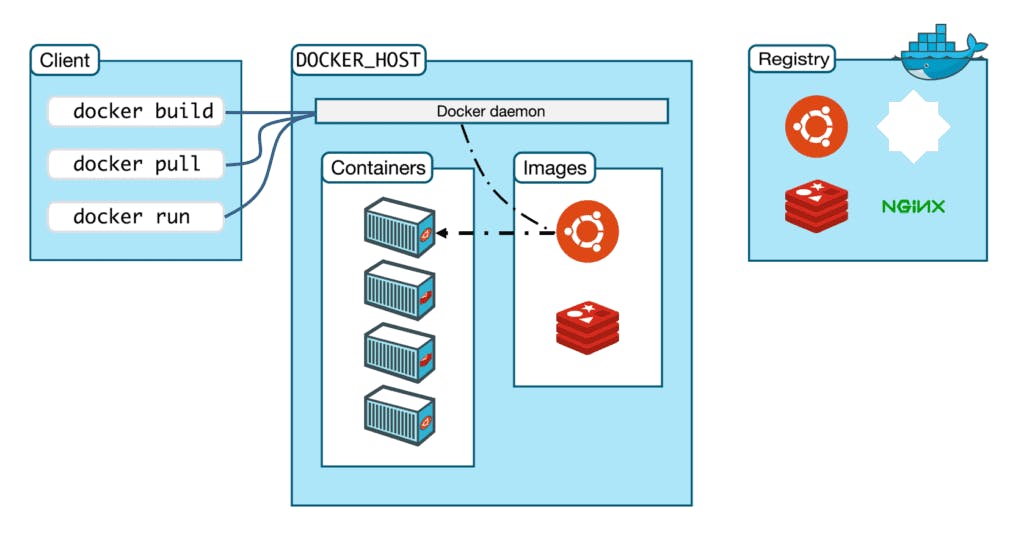

The Docker daemon (dockerd) listens for Docker API requests made through the Docker client and manages Docker objects such as images, containers, networks, and volumes.

Docker Client

The Docker client is how you interact with Docker. It provides a command-line interface (CLI), the docker command, which sends commands such as "docker run" and "docker stop" to the Docker daemon.

Docker Container

By now you should have an idea of what a Docker container is: a running instance of your packaged application, the actual box in which your application runs. In production, these containers often run on virtual machines.

Docker Image and Dockerfile

A Docker image is a read-only template that defines a Docker container. Let's take an example to break this down. Suppose you're a cook and you want to make a dish. For that you have a recipe, which lists the ingredients and the steps to make the dish, and when you follow the recipe, the dish is created. Similarly, a Docker image is created from a recipe like this, and the file that contains the recipe is called a Dockerfile.

A Dockerfile lists the dependencies you need, the code to include, the commands to run, and so on. When you build a Dockerfile (with "docker build"), you get a Docker image. That image is something you can share with your friend, and when your friend runs the image, it creates a Docker container.

So now you don't have to share all the dependencies, node modules, and everything else. Instead, you'll say, "Hey, here's a Docker image. Just run it and you'll get the application in exactly the same state I have it in right now." So an image defines a Docker container, somewhat like a snapshot of a virtual machine.
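To make the recipe, image, and container relationship concrete, here is a toy model in Python. It is not how Docker actually builds images: the `build` and `run` functions, the `Image` and `Container` classes, and the `my-app` image are all hypothetical, and the parser only understands four instructions (FROM, COPY, RUN, CMD). What it does show is that one image can be run many times, and each container gets its own state while the image stays unchanged.

```python
from dataclasses import dataclass, field
from itertools import count

# Toy model (hypothetical, not Docker's implementation):
# a Dockerfile is text, an image is the immutable result of building it,
# and containers are independent instances created from that image.

@dataclass(frozen=True)
class Image:
    name: str
    base: str      # FROM: the image this one starts from
    steps: tuple   # COPY/RUN steps in order, roughly like image layers
    cmd: str       # CMD: what a container runs by default

@dataclass
class Container:
    id: int
    image: Image
    state: dict = field(default_factory=dict)  # each container's own writable state

_next_id = count(1)

def build(name: str, dockerfile: str) -> Image:
    """Parse a tiny subset of Dockerfile syntax into an Image."""
    base, steps, cmd = "", [], ""
    for line in dockerfile.strip().splitlines():
        instruction, _, args = line.strip().partition(" ")
        if instruction == "FROM":
            base = args
        elif instruction in ("COPY", "RUN"):
            steps.append(f"{instruction} {args}")
        elif instruction == "CMD":
            cmd = args
    return Image(name, base, tuple(steps), cmd)

def run(image: Image) -> Container:
    """Start a new container from an image; the image itself is never modified."""
    return Container(next(_next_id), image)

DOCKERFILE = """
FROM node:18
COPY . /app
RUN npm install
CMD node /app/server.js
"""

image = build("my-app", DOCKERFILE)
mine, friends = run(image), run(image)
mine.state["visits"] = 1     # changing my container...
print(friends.state)         # ...doesn't affect my friend's: {}
```

Because `Image` is frozen, the only way to "change" an image in this model is to build a new one, which mirrors how you rebuild a Docker image after editing the Dockerfile.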

Docker Registries

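A registry is where Docker images are stored; Docker Hub is the public registry Docker uses by default. For example, if you want to try out MongoDB but don't want to install it on your computer, what will you do? You'll just run "docker run mongo" (mongo is the name of the official MongoDB image). First, Docker checks whether the mongo image exists on your local computer. If it doesn't, Docker pulls it from the registry (Docker Hub) and then starts a container from it. You can also download the image ahead of time with "docker pull mongo". That way you can run tools like MongoDB without installing them directly on your machine.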

The image above shows the flow between Docker components. You type your commands into the client, and the client talks to the Docker daemon, saying something like, "Hey Docker daemon, I need you to run this particular image." The daemon looks for that image among its local images. If it's present, the daemon creates a container from it. If it's not, the daemon goes to the registry, downloads the image, and then creates a container from it.
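Here is a rough sketch of that flow in Python, assuming the registry and the daemon's local image store can be modeled as plain dictionaries. The `Registry`, `Daemon`, and `Client` classes are hypothetical stand-ins, not Docker's real API; the point is the check-locally-then-pull logic.

```python
class Registry:
    """Stand-in for a registry such as Docker Hub."""
    def __init__(self, images):
        self.images = images      # image name -> image contents
        self.pulls = 0            # number of downloads served

    def pull(self, name):
        if name not in self.images:
            raise LookupError(f"image {name!r} not found in registry")
        self.pulls += 1
        return self.images[name]

class Daemon:
    """Stand-in for the Docker daemon: owns local images and containers."""
    def __init__(self, registry):
        self.registry = registry
        self.local_images = {}    # images already on this machine
        self.containers = []

    def run(self, name):
        # 1. Look for the image locally; 2. if it's missing, pull it.
        if name not in self.local_images:
            self.local_images[name] = self.registry.pull(name)
        # 3. Create a container from the (now local) image.
        container = {"id": len(self.containers) + 1, "image": name}
        self.containers.append(container)
        return container

class Client:
    """The client only forwards requests; the daemon does the work."""
    def __init__(self, daemon):
        self.daemon = daemon

    def run(self, name):
        return self.daemon.run(name)

hub = Registry({"mongo": "<mongo image layers>"})
client = Client(Daemon(hub))
client.run("mongo")   # not local yet: pulled from the registry
client.run("mongo")   # already local: no second download
print(hub.pulls)      # 1
```

Keeping the client thin matches the architecture described above: the same daemon can serve requests from any client that speaks its API.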

Managing Containers

Imagine you are running an e-commerce application in containers. Let's say 100 containers are running at the moment and you are about to host a flash sale. Thousands of people are going to visit your website, which will increase the load on the containers, and if nothing changes, your website may go down.

When the load on the website increases, we want to automatically increase the number of running containers (in this case from 100 to 200) and distribute the load across them to avoid downtime. Once the sale has ended, the load decreases, but the extra containers are still running and adding to your bill. So we also want to reduce the number of containers when they are not needed.
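Kubernetes automates this with the Horizontal Pod Autoscaler, whose documented core rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). Below is a minimal Python sketch of that rule applied to the flash-sale numbers, assuming CPU utilization is the metric. The 0.1 tolerance matches the autoscaler's default; the function name and the min/max defaults are made up for this example, and the real controller also accounts for things like Pod readiness and stabilization windows.

```python
import math

def desired_replicas(current, current_util, target_util,
                     min_replicas=1, max_replicas=1000, tolerance=0.1):
    """HPA-style rule: scale the replica count in proportion to the load.

    desired = ceil(current * current_util / target_util), clamped to
    [min_replicas, max_replicas]. The bounds here are hypothetical defaults.
    """
    ratio = current_util / target_util
    if abs(ratio - 1.0) <= tolerance:
        return current                      # close enough to target: no change
    desired = math.ceil(current * current_util / target_util)
    return max(min_replicas, min(max_replicas, desired))

# Flash sale: 100 containers at 90% CPU, target 45% -> scale up to 200.
print(desired_replicas(100, 90, 45))   # 200
# After the sale: 200 containers at 20% CPU, target 50% -> scale down to 80.
print(desired_replicas(200, 20, 50))   # 80
```

The tolerance keeps the count from flapping up and down when the load hovers near the target, and the clamp plays the role of the HPA's minReplicas and maxReplicas settings.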

Some containers may also crash while they are running, and you have to restart them. Or maybe you want to update your application to a newer version without any downtime. For example, when Instagram rolls out an update, you don't notice any downtime: the update is applied on a rolling basis, so the application gets updated while you're using it.
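A rolling update can be sketched as a loop that starts one new Pod, checks that it is healthy, and only then stops one old Pod. The sketch below assumes the Deployment settings maxSurge=1 and maxUnavailable=0 (both real rolling-update fields; Kubernetes defaults them to 25%). The `rolling_update` function and the `is_healthy` callback are hypothetical, and Pods are just strings such as "v1-0".

```python
def rolling_update(pods, new_version, is_healthy):
    """Replace every Pod with new_version, one at a time.

    Assumes maxSurge=1 and maxUnavailable=0: start one new Pod, check it,
    then stop the oldest old Pod. Returns (pods, running_counts, finished).
    """
    pods = list(pods)
    running_counts = []                 # Pods serving after each step
    for i in range(len(pods)):
        new_pod = f"{new_version}-{i}"
        if not is_healthy(new_pod):
            # Halt the rollout; the remaining old Pods keep serving traffic.
            return pods, running_counts, False
        pods.append(new_pod)            # surge: briefly one extra Pod
        pods.pop(0)                     # retire the oldest Pod
        running_counts.append(len(pods))
    return pods, running_counts, True

old = ["v1-0", "v1-1", "v1-2"]
print(rolling_update(old, "v2", lambda pod: True))
# (['v2-0', 'v2-1', 'v2-2'], [3, 3, 3], True)
```

Because a new Pod must pass its health check before an old one is removed, the number of serving Pods never drops below the desired count, which is why users don't notice the update.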

Doing all these tasks manually requires a lot of effort and it's not efficient at all. This is where Kubernetes comes into the picture.

What is Kubernetes

Kubernetes is a container orchestration system that was open-sourced by Google in 2014. In simple terms, it makes it easier for us to manage containers by automating various tasks. A container orchestration engine is used to automate deploying, scaling, and managing containerized applications on a group of servers.

Kubernetes Architecture

Pods

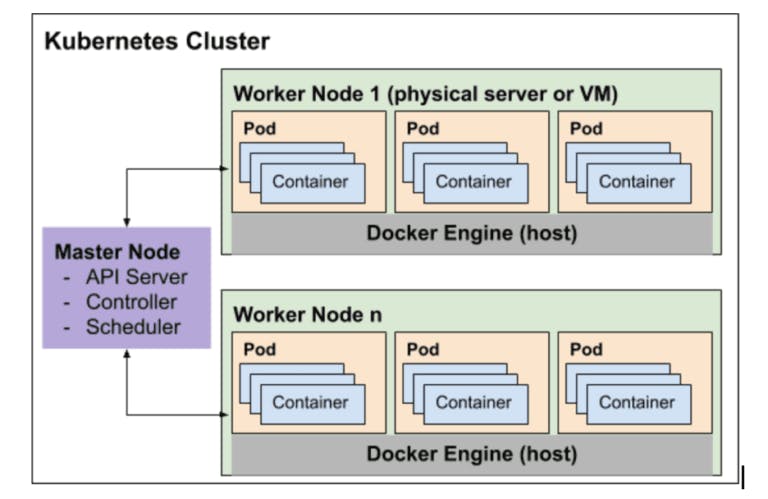

The smallest unit in Docker is a container, while the smallest deployable unit in Kubernetes is a Pod, which wraps one or more containers. A Pod runs on a node; the node can be a virtual machine, in which case the Pods run on that virtual machine.

Nodes

Kubernetes runs your workload by placing containers into Pods to run on Nodes. A node may be a virtual or physical machine, depending on the cluster. Each node is managed by the control plane and contains the services necessary to run Pods.

Typically you have several nodes in a cluster; in a learning or resource-limited environment, you might have only one node.

The components on a node include the kubelet, a container runtime, and the kube-proxy.

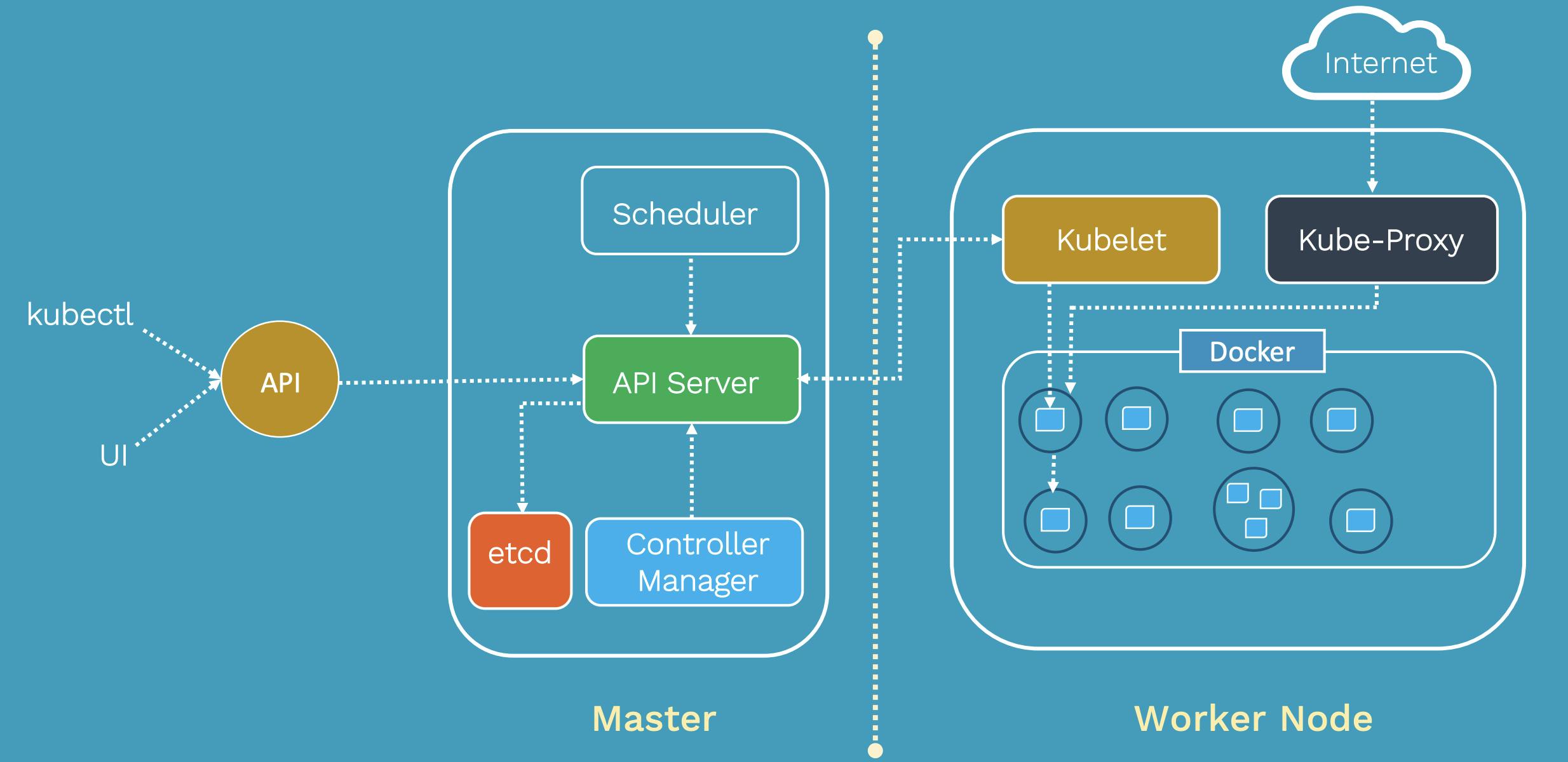

Master Node

The master node (also called the control plane node) is responsible for managing the whole cluster. It monitors the health of the worker nodes and keeps track of information about the members of the cluster. For example, if a worker node fails, the master node moves its workload to other healthy worker nodes.
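Here is a toy version of that failover in Python. It assumes the cluster can be described as a dict mapping each node name to the Pods on it, and it places each orphaned Pod on the healthy node with the fewest Pods. This is a simplification: in real Kubernetes, controllers create replacement Pods and the scheduler places them, rather than literally moving the original Pods. The `fail_over` function and the node and Pod names are hypothetical.

```python
def fail_over(assignments, failed_node):
    """Reassign Pods from a failed node to the least-loaded healthy nodes.

    assignments: dict mapping node name -> list of Pod names.
    Returns a new dict of healthy nodes; the input is left unchanged.
    """
    healthy = {node: list(pods) for node, pods in assignments.items()
               if node != failed_node}
    orphaned = assignments.get(failed_node, [])
    if orphaned and not healthy:
        raise RuntimeError("no healthy worker nodes left")
    for pod in orphaned:
        # Pick the healthy node currently running the fewest Pods.
        target = min(healthy, key=lambda node: len(healthy[node]))
        healthy[target].append(pod)
    return healthy

cluster = {"node-a": ["web-1", "web-2"], "node-b": ["web-3"], "node-c": []}
print(fail_over(cluster, "node-a"))
# {'node-b': ['web-3', 'web-2'], 'node-c': ['web-1']}
```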

Components of the Master Node

API server: The gatekeeper for the entire cluster. All CRUD operations on cluster objects go through the API, for example when you use kubectl.

Scheduler: Decides which node each new Pod should run on, based on the resources the Pod requests and the resources available on each node.

Controller Manager: Runs the controllers that ensure nodes are up and running and that the correct number of Pods is running at all times.

etcd: A lightweight, distributed key-value database. It is the central store for the cluster state at any point in time.
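These components work together as a control loop: the desired state lives in etcd, the controller manager compares it with what is actually running, and the scheduler picks nodes for any new Pods. The Python sketch below models that loop, assuming a plain dict in place of etcd and CPU as the only resource (in millicores, the unit Kubernetes uses). The real scheduler also works in two phases, filtering out nodes that can't fit a Pod and then scoring the rest, but its scoring is far richer than "most free CPU". All names here are hypothetical.

```python
# Toy control plane (hypothetical names): a dict stands in for etcd,
# schedule() plays the scheduler and reconcile() a replica controller.
# CPU is the only resource, in millicores (1000m = 1 core).
store = {
    "desired": {"web": 3},                        # desired replicas per app
    "nodes": {"node-a": 2000, "node-b": 2000},    # free CPU per node
    "pods": {},                                   # Pod name -> node name
}

def schedule(cpu_request, nodes):
    """Filter nodes that can fit the Pod, then score by most free CPU."""
    feasible = {node: free for node, free in nodes.items() if free >= cpu_request}
    if not feasible:
        return None                               # the Pod would stay Pending
    return max(feasible, key=feasible.get)

def reconcile(store, app, cpu_request):
    """One pass of the control loop: make actual state match desired state."""
    desired = store["desired"][app]
    pods, nodes = store["pods"], store["nodes"]
    prefix = app + "-"
    current = sorted(p for p in pods if p.startswith(prefix))
    for pod in current[desired:]:                 # too many: delete the extras
        nodes[pods.pop(pod)] += cpu_request
    next_id = 0
    while sum(p.startswith(prefix) for p in pods) < desired:   # too few: create
        name = f"{prefix}{next_id}"
        next_id += 1
        if name in pods:
            continue
        node = schedule(cpu_request, nodes)
        if node is None:
            break                                 # not enough capacity left
        pods[name] = node
        nodes[node] -= cpu_request

reconcile(store, "web", cpu_request=500)
print(store["pods"])   # {'web-0': 'node-a', 'web-1': 'node-b', 'web-2': 'node-a'}
```

Scaling is then just a matter of changing the desired count in the store and running `reconcile` again; the loop works out which Pods to add or remove.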

Components of the Worker Node

Kubelet: The primary node agent that runs on each worker node in the cluster. It ensures that the containers described in each Pod spec are running and healthy.

Kube-Proxy: Maintains the network rules on each node that route traffic to Pods. This is how Services are reachable inside the cluster and exposed to the outside world.

Pods: The scheduling unit in Kubernetes, roughly comparable to a virtual machine in the virtualization world. Each Pod consists of one or more containers.
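When a container in a Pod keeps crashing, the kubelet restarts it (for example with restartPolicy set to Always) using an exponential backoff delay of 10s, 20s, 40s, and so on, capped at five minutes. Below is a small Python sketch of that behavior. `backoff_delays` and `keep_running` are hypothetical names, and instead of actually sleeping, the sketch just records how long it would have waited.

```python
import itertools

def backoff_delays(base=10, cap=300):
    """Yield restart delays in seconds: 10, 20, 40, ... capped at 300 (5 minutes)."""
    delay = base
    while True:
        yield delay
        delay = min(delay * 2, cap)

def keep_running(start_container, max_attempts):
    """Toy kubelet loop: restart a crashing container with exponential backoff.

    start_container() is a hypothetical callback that returns True if the
    container started and stayed healthy. Returns (healthy, waits).
    """
    waits = []
    delays = backoff_delays()
    for _ in range(max_attempts):
        if start_container():
            return True, waits
        waits.append(next(delays))   # a real kubelet would wait this long
    return False, waits

print(list(itertools.islice(backoff_delays(), 7)))
# [10, 20, 40, 80, 160, 300, 300]
```

The growing delay keeps a broken container from being restarted in a tight loop, while the cap means a container that eventually recovers is retried at least every five minutes.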

Cluster

A Kubernetes cluster is a set of nodes that run containerized applications. Containerized applications are more lightweight and flexible than virtual machines, so clusters make applications easier to develop, move, and manage.

Thank you so much for reading 💖

This is my very first blog and I hope you like it. There is always room for improvement and I'm looking forward to your reviews 😊

Do Like and Share this blog and follow me on Twitter

You can ping me on Twitter - twitter.com/anubhavjoshi040